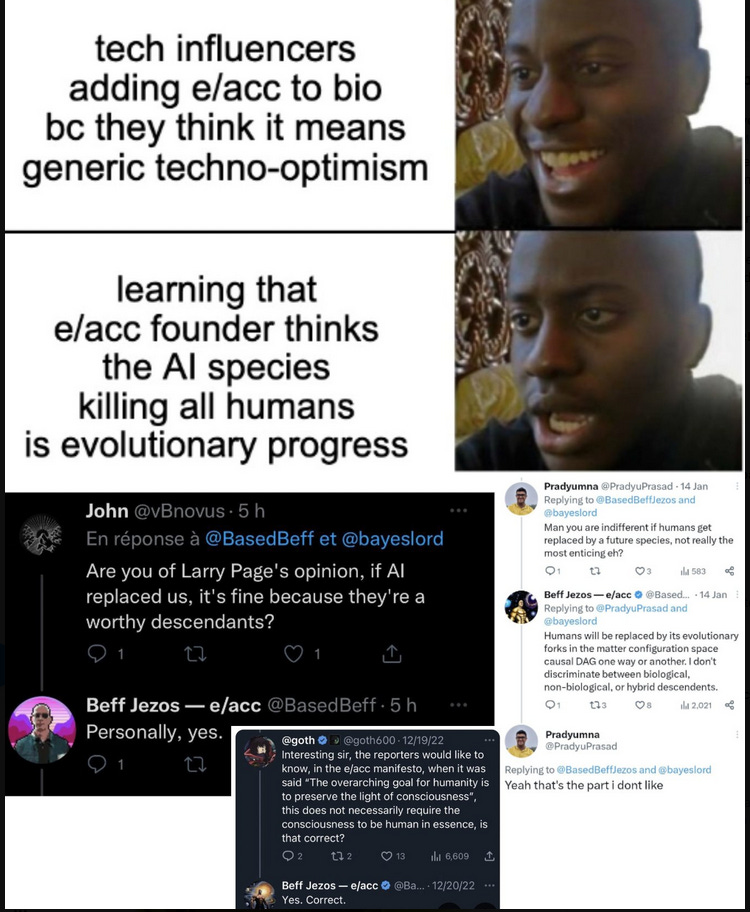

Effective accelerationists, or “e/acc” as they self-identify, are people involved in AI development who want to accelerate the development of AI to reach artificial general intelligence (AGI) or artificial superintelligence (ASI). They do not care at all about AI safety, and they understand that creating an AI as far above humans as humans are above insects is pretty unlikely to work out well for humans. Almost by definition, you cannot control something that is both far smarter than you and thinks 10,000 times faster.

The e/acc crowd don’t care about the risks they are running, and they also don’t think anyone else gets a vote.